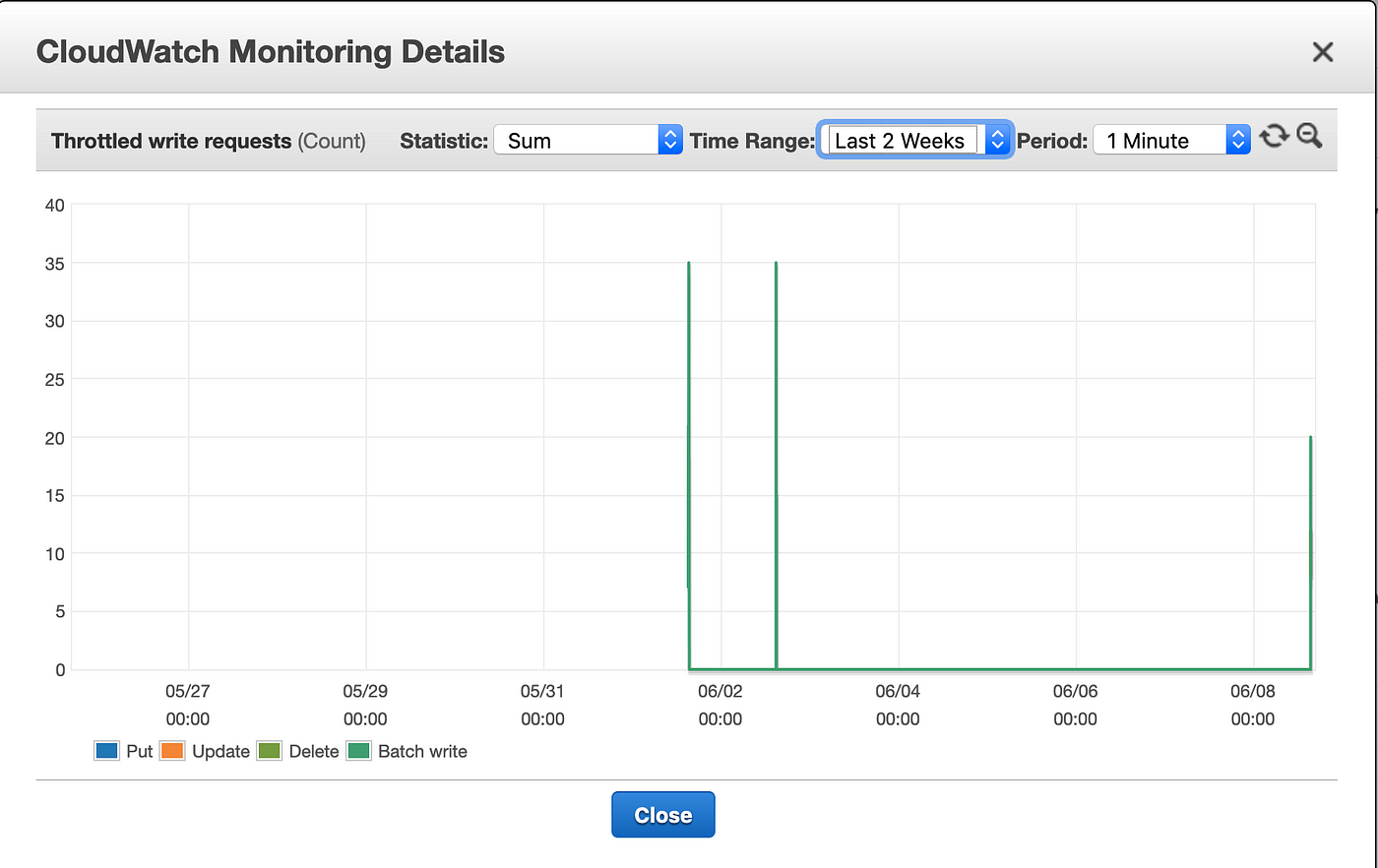

I'm currently in the process of seeding 1.8K rows in DynamoDB. When a user is created, these rows need to be generated and inserted. They don't need to be read immediately (let's say, within 3 to 5 seconds). I'm currently using AWS Lambda, and I'm getting hit by a timeout exception (probably because more WCUs are consumed than provisioned; I have 5, with Auto-Scaling disabled).

I've tried searching around Google and StackOverflow, and this seems to be a gray area in which no clear path exists (which is kind of strange, considering that DynamoDB is marketed as an incredible solution for handling massive amounts of data per second).

We know that DynamoDB limits batch inserts to 25 items per batch to prevent HTTP overhead. That would mean we could call `batchWrite` an unlimited number of times and just increase the WCUs.

I've tried calling `batchWrite` an unlimited number of times by just firing the calls and not awaiting them, and nothing seems to happen. (Will this count? I've read that since JS is single-threaded, the requests will be handled one by one anyway, except that I wouldn't have to wait for the response if I don't use a promise. I'm currently using Node 10 and Lambda.) If I promisify the call and await it, I get a Lambda timeout exception (probably because it ran out of WCUs).

I currently have 5 WCUs and 5 RCUs (are these too small for these randomly spiking operations?). In addition, I've read that Auto-Scaling doesn't automatically kick in, and that Amazon will only resize the Capacity Units 4 times a day. I'm kind of stuck, as I don't want to be randomly increasing the WCUs for short periods of time.

How do I write more than 25 items/rows into a DynamoDB table?

Edit x1: Replaced the snippet with the full file.

Here's the full file I'm using to insert into DynamoDB:

```javascript
const aws = require("aws-sdk")
const client = new (
```
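
One common pattern for more than 25 rows is to split them into groups of at most 25, await each batch call, and re-send whatever comes back in `UnprocessedItems`. BatchWriteItem can accept only part of a batch (for example, when throughput is exceeded) and return the rest there, and AWS recommends retrying those items with exponential backoff. The sketch below assumes a hypothetical `sendBatch` parameter standing in for `(params) => client.batchWrite(params).promise()` with an aws-sdk v2 `DocumentClient`; `chunk`, `writeAll`, `baseDelayMs`, and `maxRetries` are illustrative names, not part of the SDK.

```javascript
// Sketch only. `sendBatch` is a hypothetical parameter standing in for
// (params) => client.batchWrite(params).promise() with an aws-sdk v2 DocumentClient.
const MAX_BATCH_SIZE = 25; // BatchWriteItem accepts at most 25 put/delete requests per call

// Split an array into consecutive chunks of at most `size` elements.
function chunk(items, size) {
  const chunks = [];
  for (let i = 0; i < items.length; i += size) {
    chunks.push(items.slice(i, i + size));
  }
  return chunks;
}

function sleep(ms) {
  return new Promise((resolve) => setTimeout(resolve, ms));
}

// Write every item, 25 at a time, awaiting each call and re-sending
// UnprocessedItems with exponential backoff (base, 2x base, 4x base, ...).
async function writeAll(tableName, items, sendBatch, { baseDelayMs = 50, maxRetries = 8 } = {}) {
  for (const group of chunk(items, MAX_BATCH_SIZE)) {
    let requestItems = {
      [tableName]: group.map((item) => ({ PutRequest: { Item: item } })),
    };
    for (let attempt = 0; ; attempt++) {
      const result = await sendBatch({ RequestItems: requestItems });
      const unprocessed = (result && result.UnprocessedItems) || {};
      if (Object.keys(unprocessed).length === 0) break; // the whole batch was written
      if (attempt >= maxRetries) {
        throw new Error("Gave up with unprocessed items after " + maxRetries + " retries");
      }
      requestItems = unprocessed; // re-send only what DynamoDB did not write
      await sleep(baseDelayMs * 2 ** attempt);
    }
  }
}
```

It might be called as `await writeAll("Users", rows, (params) => client.batchWrite(params).promise())`, where `"Users"` and `rows` are placeholders and `client` is assumed to be a `DocumentClient`. This keeps rows from being silently dropped, but it cannot write faster than the provisioned WCUs allow.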

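The timeout is consistent with simple capacity arithmetic. Assuming each row is at most 1 KB (the row size isn't stated), one standard write costs 1 WCU, and batching does not reduce that cost. So 5 WCUs sustain roughly 5 writes per second, and 1.8K rows need about 1800 / 5 = 360 seconds, ignoring burst capacity and retries. That is far beyond the 3 to 5 second window. The helper names below are illustrative.

```javascript
// One standard write of an item up to 1 KB costs 1 WCU; larger items round up to the next KB.
function writeUnitsPerItem(itemSizeKB) {
  return Math.max(1, Math.ceil(itemSizeKB));
}

// Lower bound on seconds to write `itemCount` items at a sustained `wcu` rate,
// ignoring burst capacity and retry delays.
function minWriteSeconds(itemCount, itemSizeKB, wcu) {
  return (itemCount * writeUnitsPerItem(itemSizeKB)) / wcu;
}

console.log(minWriteSeconds(1800, 1, 5)); // 360
console.log(minWriteSeconds(1800, 1, 100)); // 18
```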
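
On the fire-and-forget attempt: JavaScript being single-threaded does not make the HTTP requests run one by one. Node sends them concurrently and handles responses as they arrive. The catch in Lambda is that an async handler's invocation is treated as finished once its returned promise settles, so requests that were never awaited may be frozen before they complete. That could explain why nothing seems to happen. A middle ground between firing everything and awaiting one call at a time is to await all calls while capping how many are in flight. The following is a minimal, generic sketch of such a limiter; `runWithConcurrency` is a hypothetical helper, not part of aws-sdk.

```javascript
// Run async task factories with at most `limit` in flight.
// Resolves to the results in the same order as `tasks`.
async function runWithConcurrency(tasks, limit) {
  const results = new Array(tasks.length);
  let next = 0;

  // Each worker claims the next unstarted task; `next++` is synchronous,
  // so two workers never claim the same index.
  async function worker() {
    while (next < tasks.length) {
      const index = next++;
      results[index] = await tasks[index]();
    }
  }

  const workers = [];
  for (let i = 0; i < Math.min(limit, tasks.length); i++) {
    workers.push(worker());
  }
  await Promise.all(workers); // the handler should await this before returning
  return results;
}
```

Each task could wrap one batch call, for example `() => client.batchWrite(params).promise()`. With only 5 WCUs, raising the limit mostly makes throttling arrive sooner; the cap pays off once more write capacity is provisioned.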